· Pandas · 5 min read

Handling Null Values in Python Pandas

Intro

In this article we are now looking at how we handle null values in our data. Null or missing values can often be found in real-world datasets, and it is important to properly handle these values in order to accurately analyze and manipulate the data. Null values can arise for a variety of reasons, such as incomplete data collection or data corruption. If left unhandled, null values can introduce errors or biases into your analysis and lead to incorrect or misleading results. Therefore, it is essential to identify and address null values before proceeding with any data analysis tasks.

We just finished correcting all of our data types in this series. You can read about that here.

Learn about the different data types in Pandas, the powerful Python library for data analysis. Discover how to work with numerical, categorical, and textual data, as well as dates and times.

Checking For Null Values

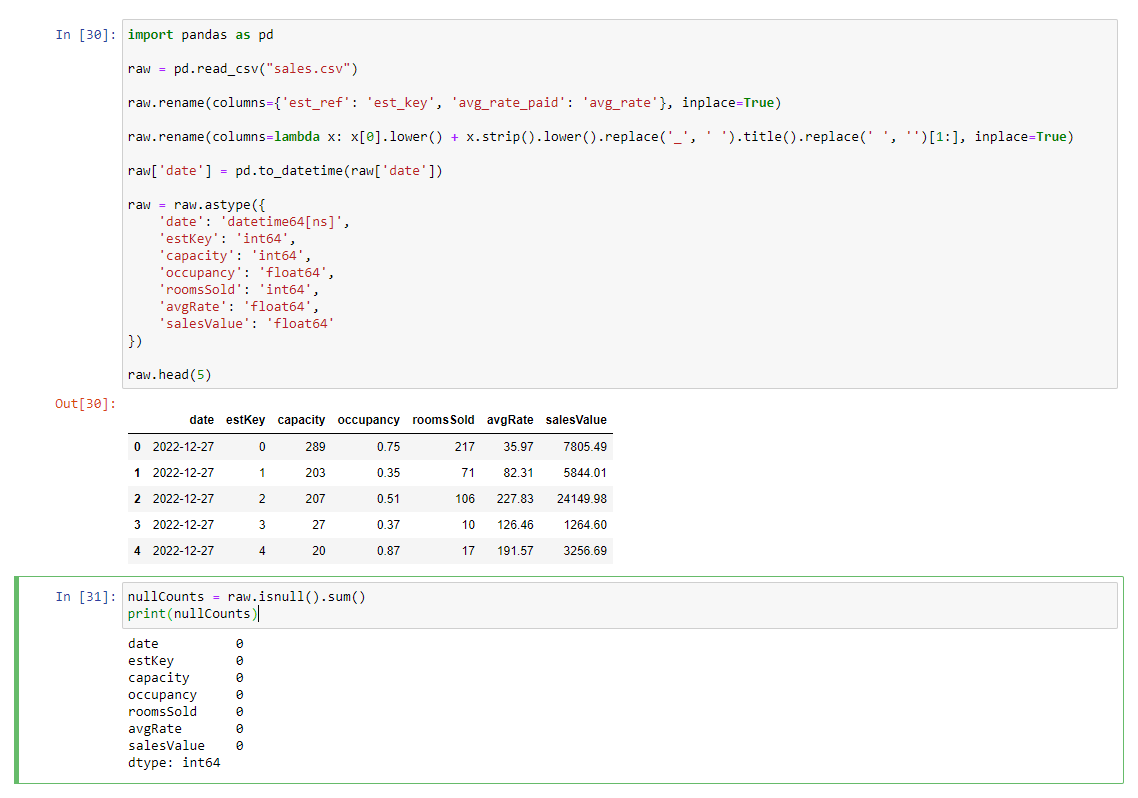

We can quite simply count Null Values within a pandas dataframe to see what we’re working with.

nullCounts = raw.isnull().sum()

print(nullCounts)

I’ve created a new block within the Jupyter Notebook for this code. You should have something that looks like this now.

You can see our result is great. We have 0 null values. Not great for our example, however we should be handling null values as a best practice anyway. Depending on the analysis that we’re doing, we might want to handle these in different ways, and we will go through them.

Result

| Column | Null Count |

|---|---|

| date | 0 |

| estKey | 0 |

| capacity | 0 |

| occupancy | 0 |

| roomsSold | 0 |

| avgRate | 0 |

| salesValue | 0 |

Removing Null Values

If we have columns that are extremely important, then we might want to remove rows of data where we have a null value in certain columns. In this example, we really can’t do anything with the data if it doesn’t have a date, or an estKey

Using dropna() to remove values

nullCounts = raw.isnull().sum()

clean = raw.dropna(subset=["date", "estKey"])

clean.head()

Notice I’ve assigned a new variable clean. Our data is not yet clean, however now that we are manipulating the data, removing rows etc it is no longer raw. Our raw data with the correct columns and types may be useful, and we will leave it so that it is available in the future.

An example of this being useful is that maybe these values are not useful for our analysis, but if you’re looking at raw sales data, and you can’t attribute sales to a date or establishment, it will still be good to see the volume of sales we’re talking about here, as there could be impact of this.

Stages of data cleanliness

The stages of cleaning and preparing data are extremely important, and actually we are going through them as part of this course. You can read more about them here

Effective data analysis relies on clean, consistent, and well-structured data. In this post, we explore the different stages of data cleanliness, from raw data to production-ready data

Calculating Null Values

There are times where maybe we can calculate our Null values by using other columns we have available. In our example, the avgRate can be calculated by taking salesValue / roomsSold. Occupancy can be calculated by taking capacity / roomsSold

It’s unlikely we would have null values like this and then also the columns to correct them in the same dataset. You will usually need to join other tables to make this happen, and we will cover joins soon. For this tutorial though, let’s implement the code to make this happen. The first thing I’m going to do is extend our dropna() to also include the columns, salesValue, roomsSold and capacity. If we don’t have these values then we can not calculate the others.

Adding to the dropna()

nullCounts = raw.isnull().sum()

clean = raw.dropna(subset=["date", "estKey", "roomsSold", "capacity", "salesValue"])

clean.head()

Calculating values

Now we can use the following code to replace Null values in average rate and occupancy. I’m ensuring to round the value to 2 decimal places so that it’s consistent with the rest of the rows of data.

nullCounts = raw.isnull().sum()

clean = raw.dropna(subset=["date", "estKey", "roomsSold", "capacity", "salesValue"])

if clean["avgRate"].isnull().any():

clean["avgRate"] = clean["salesValue"] / clean["roomsSold"]

clean["avgRate"] = clean["avgRate"].round(2)

if clean["occupancy"].isnull().any():

clean["occupancy"] = clean["roomsSold"] / clean["capacity"]

clean["occupancy"] = clean["occupancy"].round(2)

clean.head()

Breaking this down

- The isnull function is called on the “occupancy” column of the “clean” dataframe. This returns a new series of boolean values, where each value is True if the corresponding element in the “occupancy” column is null, and False otherwise.

- The any function is called on the resulting series of boolean values. This returns a single boolean value that is True if any of the values in the series are True, and False otherwise.

- The if statement checks if the boolean value returned by the any function is True. If it is, the code inside the if statement will be executed. If it is False, the code inside the if statement will be skipped.

It’s OK to do this here, as we are calculating the avgRate exactly. Sometimes we can’t quite get the exact number like we can here and it’s important to understand the impact it could have on your analysis of using an estimate. You have to decide is it better to work with slightly incorrect data or an estimate, or to simply not include that data at all.

Conclusion

We have only covered a couple of methods here. There are other ways of handling null values however these are the most common ones.

In this guide, we will go over some key functionality of Jupyter notebooks that are essential to learn.